I’ve been using AI for a while now to boost my productivity. For simple, low-complexity tasks, I find AI inline autocomplete and AI chat (as a rubber ducky) helpful. In my experience, AI isn’t suited to medium- to high-complexity tasks.

Over the past year I’ve been using a variety of tools (with varying models) including, but not limited to:

- GitHub Copilot

- Cody (AI chat)

- ChatGPT

- Claude

- Mistral

I’ve used these tools, alone or in combination, for my daily tasks: coding, designing, writing, etc.

However, I’ve been looking for a way to run AI on my local machine. Not because tokens for these models are extremely expensive, but for the same reason I self-host a lot of my services: privacy and control.

After some research, I settled on testing this stack:

- Ollama: To run the AI models

- Continue.dev: For the JetBrains IDE integration

- JetBrains IDE: For the actual development

I’ll show you how I’ve set this up. For reference, here are my specs:

| Component | Spec |
|---|---|
| System | Intel NUC 11 Enthusiast |
| CPU | 11th Gen Intel(R) Core(TM) i7-1165G7 @ 2.80GHz |
| RAM | 64GB |
| GPU | NVIDIA RTX 2060 |
| OS | Ubuntu 24.04.1 LTS |

Ollama

Installing Ollama was trivial: head over to their download page and choose the installation method for your platform.

For me, it was a matter of running this command:

```sh
curl -fsSL https://ollama.com/install.sh | sh
```

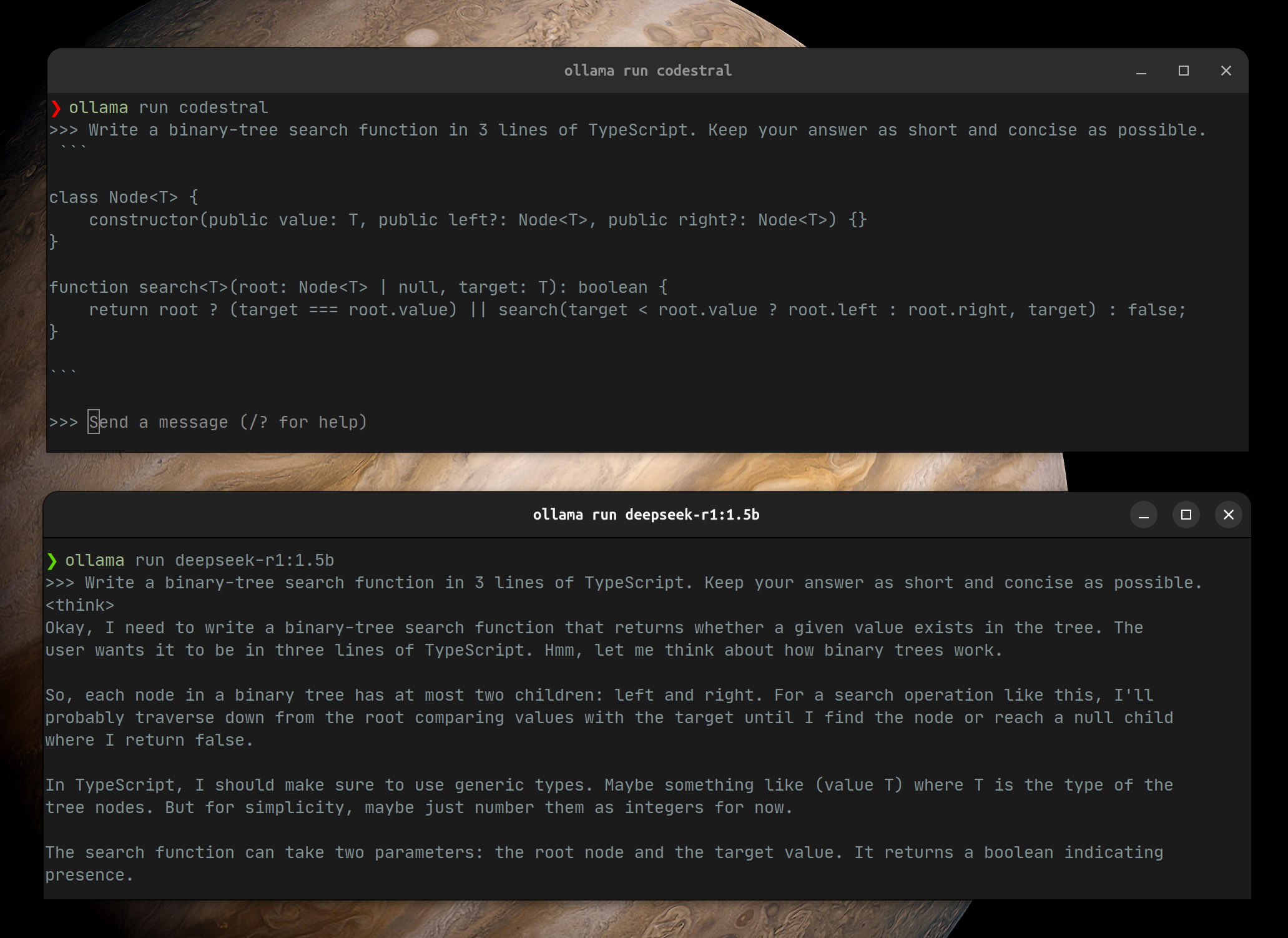
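Once the installer finishes, the Ollama server should be listening locally. Below is a minimal Python sketch to confirm that the server is reachable. It assumes Ollama's default address, `http://localhost:11434`. The helper name `is_ollama_up` is my own and is not part of any Ollama library.

```python
import urllib.request

# Assumption: Ollama's default listen address (change it if you set OLLAMA_HOST).
OLLAMA_URL = "http://localhost:11434"


def is_ollama_up(base_url: str = OLLAMA_URL, timeout: float = 2.0) -> bool:
    """Return True if a server at base_url answers GET / with HTTP 200 (hypothetical helper)."""
    try:
        # A running Ollama server answers the root path with a short plain-text status line.
        with urllib.request.urlopen(base_url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # URLError (e.g. connection refused), HTTPError and timeouts are all OSError subclasses.
        return False


if __name__ == "__main__":
    print("Ollama is running" if is_ollama_up() else "Ollama is not reachable")
```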

After that, I downloaded a suitable model. I chose codestral, but you can easily choose another (more up-to-date) one. The ollama run command pulls the model if it isn’t present yet and then starts an interactive session:

```sh
ollama run codestral
```
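To double-check which models are downloaded, you can query the local REST API at `/api/tags`. It returns the same list that `ollama list` prints. The sketch below assumes the default address. `model_names` and `list_local_models` are hypothetical helpers.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumed default address


def model_names(tags_payload: dict) -> list[str]:
    """Pull the model names out of an /api/tags response body."""
    return [entry["name"] for entry in tags_payload.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL) -> list[str]:
    """Ask the local Ollama server which models have been downloaded."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return model_names(json.load(resp))


if __name__ == "__main__":
    try:
        for name in list_local_models():
            print(name)
    except OSError as exc:
        print(f"Could not reach Ollama: {exc}")
```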

Switching between models is just as easy. I tested several, and the best performer was deepseek-r1:1.5b, with extremely snappy responses and minimal thinking time.
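You can put numbers on how snappy a model feels. When called with `"stream": false`, Ollama's `/api/generate` endpoint reports `eval_count` (tokens generated) and `eval_duration` (in nanoseconds). Below is a rough comparison sketch. It assumes the default address and that both models are already pulled. The prompt and helper names are arbitrary choices of mine.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumed default address


def generate(model: str, prompt: str, base_url: str = OLLAMA_URL) -> dict:
    """Run one non-streaming completion and return Ollama's final JSON object."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode()
    request = urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    # Generous timeout: the first call may also have to load the model into memory.
    with urllib.request.urlopen(request, timeout=300) as resp:
        return json.load(resp)


def tokens_per_second(result: dict) -> float:
    """Generation speed: eval_count tokens over eval_duration nanoseconds."""
    duration_ns = result.get("eval_duration", 0)
    if duration_ns <= 0:
        return 0.0
    return result.get("eval_count", 0) / (duration_ns / 1e9)


if __name__ == "__main__":
    prompt = "Write a Python function that reverses a string."
    for model in ("codestral", "deepseek-r1:1.5b"):
        try:
            result = generate(model, prompt)
        except OSError as exc:
            print(f"{model}: request failed ({exc})")
            continue
        print(f"{model}: {tokens_per_second(result):.1f} tokens/s")
```

Model load time is reported separately as `load_duration`, so this figure reflects generation speed only. It does not capture the pause before the first token.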

Continue.dev & JetBrains

For an integration similar to GitHub Copilot in JetBrains/VS Code (or Zed’s AI Chat and Inline Assist), I found Continue.dev. It appealed to me because it’s open source.

Setting it up in JetBrains was simply a matter of installing the plugin from the JetBrains Marketplace.

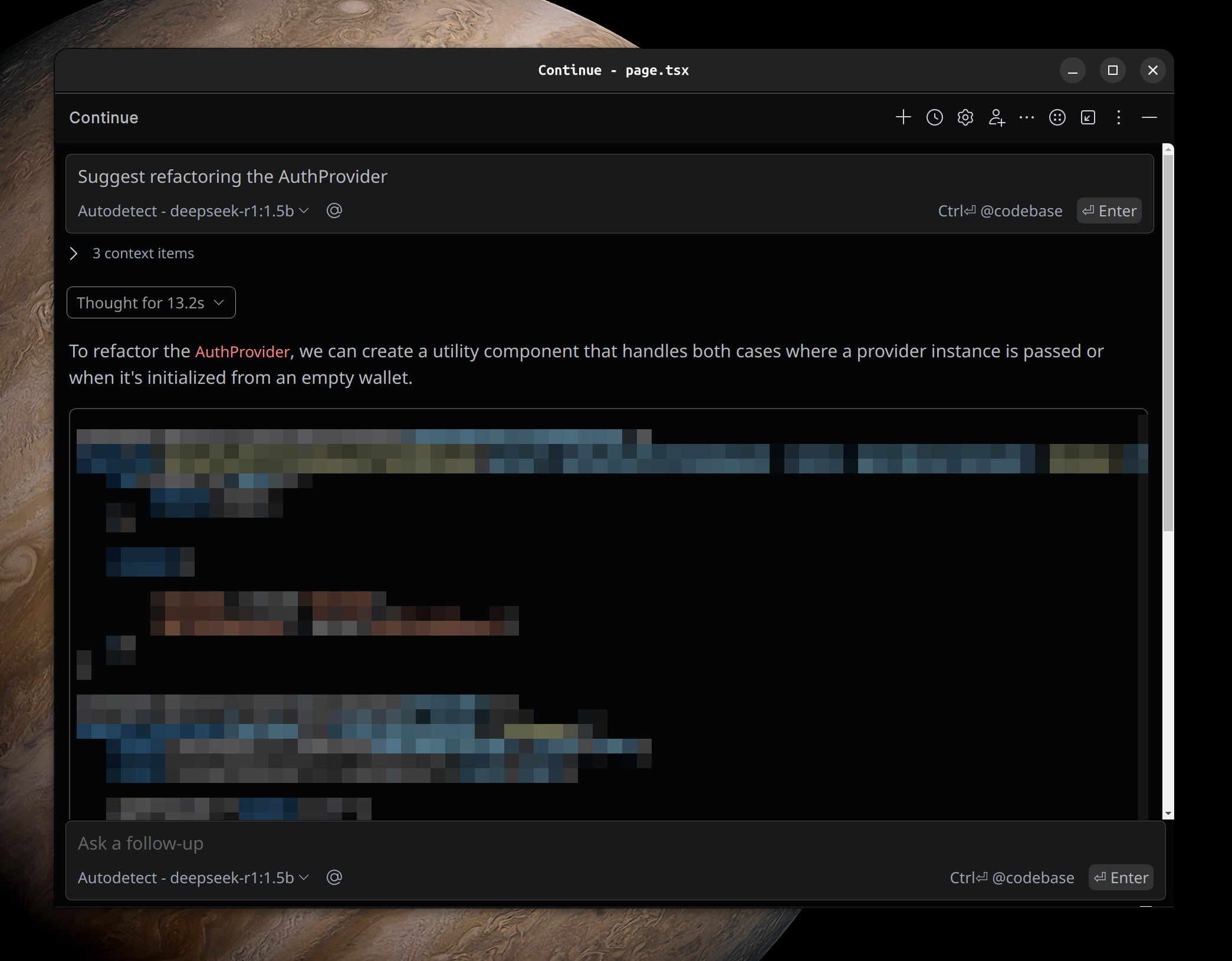

After setting that up, I was able to connect to my local Ollama instance and start using the AI models.
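Continue connects to Ollama through model entries in its config file. The sketch below assumes the JSON config at `~/.continue/config.json`, with a `models` list whose entries use `title`, `provider` and `model` keys. Newer Continue releases may use a YAML config instead, so check the current docs. `add_ollama_model` and `update_config_file` are hypothetical helpers.

```python
import json
from pathlib import Path

# Assumption: Continue's JSON config location (newer releases may use config.yaml instead).
CONFIG_PATH = Path.home() / ".continue" / "config.json"


def add_ollama_model(config: dict, title: str, model: str) -> dict:
    """Register a chat model served by the local Ollama instance, skipping duplicates."""
    models = config.setdefault("models", [])
    already_there = any(
        entry.get("provider") == "ollama" and entry.get("model") == model
        for entry in models
    )
    if not already_there:
        # No "apiBase" key: this assumes Ollama is on its default local address.
        models.append({"title": title, "provider": "ollama", "model": model})
    return config


def update_config_file(path: Path, title: str, model: str) -> None:
    """Load the config (or start from an empty one), add the model, and write it back."""
    config = json.loads(path.read_text()) if path.exists() else {}
    add_ollama_model(config, title, model)
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(config, indent=2) + "\n")
```

For example, `update_config_file(CONFIG_PATH, "Codestral", "codestral")` adds codestral as a chat model. The file is rewritten with `json.dumps`, so any custom formatting in it is not preserved.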

Impressions

I’ve been using this setup for a few days now and my impressions are mixed. The AI models are quite good for my use cases.

Codestral (and the other models I tested) are quite fast, but not fast enough for my day-to-day tasks. Of the smaller models I also tried, deepseek-r1:1.5b performed best.

Chatting with that model is much more pleasant, and its speed is on par with SaaS models.

The main thing I haven’t gotten to work properly is the inline code suggestions. I really want to use a local model for inline code suggestions, but the Ollama docs didn’t give me many pointers.
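Inline suggestions are usually fill-in-the-middle (FIM) completions. The model receives the code before the cursor and the code after it, and generates what goes in between. You can check whether a local model handles this at all, outside the IDE, through Ollama's `/api/generate` endpoint, which accepts a `suffix` field alongside `prompt`. The sketch below assumes the default address and a model whose Ollama template supports suffixes, with codestral as the example. It is not how Continue.dev builds its requests internally, and the helper names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumed default address


def build_fim_request(model: str, source: str, cursor: int, max_tokens: int = 64) -> dict:
    """Split the buffer at the cursor: code before it is the prompt, code after it the suffix."""
    return {
        "model": model,
        "prompt": source[:cursor],
        "suffix": source[cursor:],
        "stream": False,
        # Short, deterministic completions suit inline suggestions.
        "options": {"num_predict": max_tokens, "temperature": 0},
    }


def complete_at_cursor(model: str, source: str, cursor: int, base_url: str = OLLAMA_URL) -> str:
    """Ask Ollama for the text that belongs between the prefix and the suffix."""
    body = json.dumps(build_fim_request(model, source, cursor)).encode()
    request = urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=120) as resp:
        return json.load(resp)["response"]


if __name__ == "__main__":
    code = "def add(a, b):\n    \n\nprint(add(2, 3))\n"
    cursor = code.index("\n") + 5  # just after the indentation on line 2
    try:
        print(complete_at_cursor("codestral", code, cursor))
    except OSError as exc:
        print(f"Completion failed: {exc}")
```

If this returns sensible completions but the IDE still shows none, the problem is more likely in the plugin configuration than in the model.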

Reading context from the IDE can be set up with the nomic-embed-text embeddings model, configured like this in Continue (pull the model first with ollama pull nomic-embed-text):

```json
"embeddingsProvider": {
  "provider": "ollama",
  "model": "nomic-embed-text"
},
```
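An embeddings provider turns code snippets into vectors so that the most relevant ones can be retrieved as context. The sketch below illustrates the idea; it is not Continue.dev's actual indexing pipeline. It embeds a query and a few snippets with `nomic-embed-text` through Ollama's `/api/embed` endpoint, then ranks the snippets by cosine similarity. The default address and the helper names are assumptions.

```python
import json
import math
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumed default address


def embed(texts: list[str], model: str = "nomic-embed-text", base_url: str = OLLAMA_URL) -> list[list[float]]:
    """Return one embedding vector per input text."""
    body = json.dumps({"model": model, "input": texts}).encode()
    request = urllib.request.Request(
        f"{base_url}/api/embed",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=60) as resp:
        return json.load(resp)["embeddings"]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank_snippets(query: str, snippets: list[str]) -> list[str]:
    """Order snippets from most to least similar to the query."""
    vectors = embed([query, *snippets])
    query_vec, snippet_vecs = vectors[0], vectors[1:]
    scored = [(cosine_similarity(query_vec, v), s) for v, s in zip(snippet_vecs, snippets)]
    return [s for _, s in sorted(scored, key=lambda pair: pair[0], reverse=True)]


if __name__ == "__main__":
    snippets = [
        "def read_config(path):\n    return json.loads(Path(path).read_text())",
        "SELECT id, name FROM users WHERE active = 1;",
    ]
    try:
        for snippet in rank_snippets("load settings from a JSON file", snippets):
            print(snippet.splitlines()[0])
    except OSError as exc:
        print(f"Embedding request failed: {exc}")
```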

Moving forward

I’ll keep experimenting with this setup, but for now my daily driver is GitHub Copilot.

I am also experimenting with accessing Ollama through Open WebUI, which promises a decent chat-like experience with the AI models. The great thing is that it can offload web searches to my favorite search engine, Kagi. I’ll write about that in a future post.